Utopian Disorder

Monday, June 2, 2014

Monday, August 8, 2011

Neat explanation of Hyperthreading concept

I came across a neat, brief explanation of Hyperthreading in the Apple MacBook Air review here (host: arstechnica.com) by Iljitsch van Beijnum:

Hyperthreading

Twenty years ago, CPUs were nice and simple. They executed instructions that were read from memory one at a time. Life was good, but not particularly fast. Then CPUs became superscalar and started executing more than one instruction at a time. (See this classic piece from Ars cofounder Jon Stokes for background.)

It's easier to do multiple things in parallel than to do one thing faster, which is also why today's CPUs have multiple cores. Each core is basically a fully formed CPU of its own, although both CPU cores still have to share a single connection to the outside world. But as long as memory bandwidth isn't an issue, each CPU core can run at full speed regardless of what the other(s) are doing. So as long as the software manages to split up the work that must be done into pieces that can be executed fairly independently by different cores, having multiple cores pretty much always improves performance.

This is not exactly the case with hyperthreading. To the software, a CPU like the i5 or i7, which has two cores that can each run two (hyper)threads, looks the same as a four-core CPU. But in reality, there's only two cores worth of execution units that do the actual work. What the hyperthreading does is feed the execution units instructions from two different software threads, so that if one thread doesn't use the full capacity of the CPU core at a given point, the execution units are kept busy with instructions from the other thread.

It's a bit like a print shop with several printers. Let's say there are two black-and-white printers and one color printer. A computer analyzes PDF files that need to be printed 20 pages or so at a time, and sends the color pages to the color printer and the black-and-white pages to the two other printers. Having multiple cores is like setting up another computer and another three printers. That's going to double the output—if the guy that has to fill up the paper trays can keep up—but it doesn't improve efficiency. If there's 50 pages of color followed by 50 pages of black text, that still means that at any given point, at least one printer is doing nothing.

By adding a second computer, but no extra printers, it's possible to process two PDF files at the same time, so maybe while one computer is sending only color pages, the other is sending black-and-white pages. In that case you get double the performance without doubling the number of execution units. But in practice, the gain is much smaller because the needs of the multiple threads will overlap a good deal.

Unfortunately, there doesn't seem to be any way to turn off hyperthreading to see how well the system performs with and without it. So instead, I ran an old command line based, single threaded benchmark: NBench. First I ran one copy of the program, then two at the same time, and then four. In a normal dual core system, you'd expect code that isn't limited by memory bandwidth to run at the same speed regardless of whether there's another copy of the same code also running on the other core. However, the Core i5 and i7 CPUs have a feature called Turbo Boost. If only one core is active, this core gets to run faster than normal, keeping the overall heat output of the CPU within normal limits. Actually, the 1.8GHz Core i7 in the MacBook Air gets to run faster than advertised all the time.

If you look at the kernel.log file in /var/log, you'll see this cryptic message:

AppleIntelCPUPowerManagement: Turbo Ratios 008B

This means if four CPU cores are running, the extra speed is 0. The same if three cores are running. With two active cores the extra speed is 8, and with only one core active, it's B (which means 11). The extra speed is added in steps of 100MHz. Our ultra low voltage (ULV) Core i7 being a dual core CPU, there are never any third or fourth cores active, so we always get 800MHz of extra CPU power. So this is really a 2.6GHz CPU, with Intel being surprisingly modest in its marketing. If only one CPU core is active, the clock speed is increased to 2.9GHz.

Back to our testing. NBench runs ten different tests. With one copy of the program running all by itself, the extra clock cycles made the different tests between 10 and 19 percent faster compared to the results where a second copy of the program was running at the same time. It looks like the penalty for not being multithreaded isn't as severe as it was on previous multicore architectures.

I then ran four copies of the benchmark at the same time. Hyperthreading helped a lot here; four copies of the program were able to execute between 20 and 55 percent more iterations per second compared to two copies.

Hyperthreading problems

This is not the whole story, however. Let's go back to our print shop: what if the two print jobs use completely different fonts? Worst case is that the printer can't keep all the necessary fonts in memory, so each time it gets a page from the other print job, it has to load new fonts into memory, kicking out the previously loaded fonts. Now, printing two jobs at the same time is actually slower than printing them one at a time. Hyperthreading can lead to worse performance than just using one thread per core if the different threads need to access different parts of RAM, leading to cache thrashing.

For this reason, many people turned off hyperthreading in the past, but it looks like Intel's current implementation is robust enough that it helps most of the time. Because the CPU can keep track of four different threads of execution in hardware, the software no longer has to perform as many heavy handed "context switches" between different processes that need CPU time, making overall system efficiency and responsiveness better. You may want to limit the number of concurrent threads such a hyperthreading-hostile application uses, though.

Saturday, June 11, 2011

Saturday, May 28, 2011

Thursday, April 28, 2011

Perl code to print out differences between 2 files

Monday, September 6, 2010

Default seed of VCS can

In SystemVerilog, if a packet class is created with rand items, every independent run with VCS generates the same values for those rand items.

The above code has three 2-bit rand variables. When run with the vcs command:

> vcs -sverilog -R test.sv

it outputs the same values, a=2, b=2, c=1, for all 10 iterations, even when run again with the same command. This is because VCS always executes the test with the default seed, which is equal to 1. Hence, the randomize() function always runs from a constant seed (=1) in this flow.

Utilizing the characteristics of rand type, the above code can be modified to dump different values of a, b, c in each iteration when run with the vcs command.

This will dump out unique values of a, b and c in each iteration.

The same result can be obtained by changing the RNG seed from the command line using +ntb_random_seed. For running regressions, this is the best solution.
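The original listings for this post are not available, so the following is only a minimal sketch of the idea, not the author's code. It assumes a class named packet with three 2-bit rand fields, and a user-defined plusarg +pkt_seed (a hypothetical name, not a VCS built-in) that is passed to the built-in srandom() method to reseed the object.

```systemverilog
// Sketch only: class, module and plusarg names are assumptions.
class packet;
  rand bit [1:0] a, b, c;   // three 2-bit random fields
endclass

module test;
  packet       pkt;
  int unsigned seed;

  initial begin
    pkt = new();

    // If the user supplied +pkt_seed=<n>, reseed this object's RNG with it.
    // Without the plusarg, the simulator's default seed is used.
    if ($value$plusargs("pkt_seed=%d", seed))
      pkt.srandom(seed);

    repeat (10) begin
      if (!pkt.randomize())
        $error("randomize() failed");
      $display("a=%0d b=%0d c=%0d", pkt.a, pkt.b, pkt.c);
    end
  end
endmodule
```

Running the simulation with different +pkt_seed values should give different sequences, while repeating a value reproduces the same sequence, which is handy when debugging a failing regression run.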

Friday, August 20, 2010

fork…join_none and for loop

An interesting case with a fork…join_none block defined inside a for loop in SystemVerilog is shown here as an example:

The intended result of this code is to display the iteration count, i, from 0 to 3. That is, each call to the $display system task should see a unique value of i.

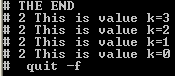

But the output of the above example is:

This can be explained as follows. Each iteration of the for loop spawns a parallel thread to display the value of variable i (the iteration count). Because of the join_none keyword, the parent does not wait for any of these threads (blocks) to complete. So the statement immediately after the for loop is executed next (with zero time delay), which in this case is the $display("THE END") system task, and hence it is the first line of output observed.

By the time the for loop finishes (in zero time), the value of i is 4. None of the spawned threads has started executing yet, so when they do run, each of them reads the current value of i, which is 4, and that is what is observed in the output. This result implies that the threads do not get private copies of i: they all share the single loop variable.

For this particular example, replacing join_none with join produces the expected output. That is, each thread displays a unique value of i, and the output shows i increasing in order from 0 to 3.

If join_none cannot be replaced (say, because a time-consuming user-defined task is being called and the logic requires that no time is spent before executing the statement following the for loop), then create an automatic variable inside the for loop and assign i to it before starting the fork…join_none block.

Here, the keyword automatic can be omitted when the enclosing scope already has automatic lifetime (for example, inside a class method); in a static scope such as a module, it should be kept. Variable k is roughly similar to a C automatic variable. The lifetime of automatic variable k is the lifetime of each fork...join_none block, so each block has its own value of k, which does not change even after the for loop completes.
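As a rough illustration of the workaround described above (a sketch, not the original listing; the module name and message strings are assumptions), the per-iteration copy can be written like this:

```systemverilog
// Sketch only: module name and display strings are assumptions.
module fork_join_none_demo;
  initial begin
    for (int i = 0; i < 4; i++) begin
      // A new k is created on every iteration and initialized from i.
      automatic int k = i;
      fork
        // The thread starts later, but it reads its own k, not the shared i.
        $display("k = %0d", k);
      join_none
    end
    // Executes before any spawned thread, since join_none does not block.
    $display("THE END");
  end
endmodule
```

Replacing k with i inside the $display call brings back the original behavior, where every thread reads the value i holds after the loop has finished.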

Wednesday, March 3, 2010

Part Select or Slice implementation in SV

The goal is to optimize the following SystemVerilog code:

sample[0] = &dq[7:0];

sample[1] = &dq[15:8];

sample[2] = &dq[23:16];

sample[3] = &dq[31:24];

...

and so on for the 64-bit packed array dq. This can be simplified by using a for loop with the SystemVerilog indexed part-select (slice) of packed arrays:

for (int i=0,j=0; i<ST_W; i+=8, j++) begin //ST_W=DATA_WIDTH/16

sample[j] = &dq[i+:8];

end

In dq[i+:8], the index i is the starting bit position of the slice and the constant 8 is its width, so the expression selects bits i through i+7. Thus, the code can be reused for any data width, with less code and better readability.
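To see the indexed part-select in isolation, here is a small self-contained sketch. The module name, the parameter names and the 64-bit test value are assumptions made for illustration; it uses a single byte index j instead of the two loop variables above.

```systemverilog
// Sketch only: names and the test pattern are assumptions.
module part_select_demo;
  localparam int DATA_WIDTH = 64;              // assumed width of dq
  localparam int NUM_BYTES  = DATA_WIDTH / 8;  // one sample bit per byte

  logic [DATA_WIDTH-1:0] dq;
  logic [NUM_BYTES-1:0]  sample;

  always_comb begin
    for (int j = 0; j < NUM_BYTES; j++)
      sample[j] = &dq[j*8 +: 8];  // AND-reduce bits [j*8+7 : j*8]
  end

  initial begin
    dq = 64'hFFFF_FFFF_0000_00FF;       // bytes 0 and 4..7 are all ones
    #1 $display("sample = %b", sample); // prints "sample = 11110001"
  end
endmodule
```

Only bytes whose eight bits are all 1 produce a 1 in sample, which is what the reduction AND operator (&) computes for each slice.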